Google Summer of Code: Creating Training set.

I describe a process of the creating a dataset for training classifier that I use for Face Detection.

Positive samples (Faces).

For this task I decided to take the Web Faces database. It consists of 10000 faces. Each face has eye coordinates which is very

useful, because we can use this information to align faces.

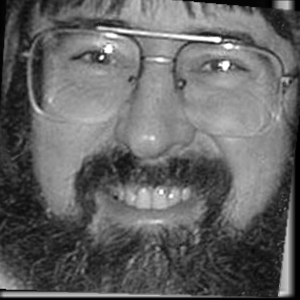

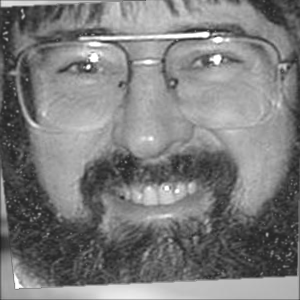

Why do we need to align faces? Take a look at this photo:

If we just crop the faces as they are, it will be really hard for classifier to learn from it. The reason for this is that we don’t know how all of the faces in the database are positioned. Like in the example above the face is rotated. In order to get a good dataset we first align faces and then add small random transformations that we can control ourselves. This is really convinient because if the training goes bad, we can just change the parameters of the random transformations and experiment.

In order to align faces, we take the coordinates of eyes and draw a line through them.

Then we just rotate the image in order to make this line horizontal. Before running

the script the size of resulted images is specified and the amount of the area above and

below the eyes, and on the right and the left side of a face. The cropping also takes care

of the proportion ratio. Otherwise, if we blindly resize the image the resulted face will

be spoiled and the classifier will work bad. That way we can be sure now that all our faces

are placed cosistently and we can start to run random transformations. The idea that I described

was taken from the following page.

Have a look at the aligned faces:

As you see the amount of area is consistent across images. The next stage is to transform them

in order to augment our dataset. For this purpose we will use OpenCv create_samples utility. This utility takes all the images and creates new

images by randomly transforming the images and changing the intensity in a specified manner. For my purposes I have chosen the following parameters -maxxangle 0.5 -maxyangle 0.5 -maxzangle 0.3 -maxidev 40. The angles specify the maximum rotation angles in 3d and the maxidev specifies the maximum deviation in the intesity changes. This script also puts images on the specified by user background.

This process is really complicated if you want to extract images in the end and not the .vec file

format of the OpenCv.

This is a small description on how to do it:

- Run the bash command

find ./positive_images -iname "*.jpg" > positives.txtto get a list of positive examples.positive_imagesis a folder with positive examples. - Same for the negative

find ./negative_images -iname "*.jpg" > negatives.txt. - Run the

createtrainsamples.plfile like thisperl createtrainsamples.pl positives.txt negatives.txt vec_storage_tmp_dir. Internally it usesopencv_createsamples. So you have to have it compiled. It will create a lot of.vecfiles in the specified directory. You can get this script from here. This command transforms each image in thepositives.txtand places the results as.vecfiles in thevec_storage_tmp_dirfolder. We will have to concatenate them on the next step. - Run

python mergevec.py -v vec_storage_tmp_dir -o final.vec. You will have one.vecfile with all the images. You can get this file from here. - Run the

vec2images final.vec output/%07d.png -w size -h size. All the images will be in the output folder.vec2imagehas to be compiled. You can get the source from here.

You can see the results of the script now:

Negative samples.

Negative samples were collected from the aflw database by eleminating faces from the images and taking random samples from the images. This makes sence because the classifier will learn negatives samples from the images where the faces usually located. Some people usually take random pictures of text or walls for negative examples, but it makes sence to train classifier on the things that most probably will be on the images with faces.